Garner Better Results by A/B Testing Your Campaigns

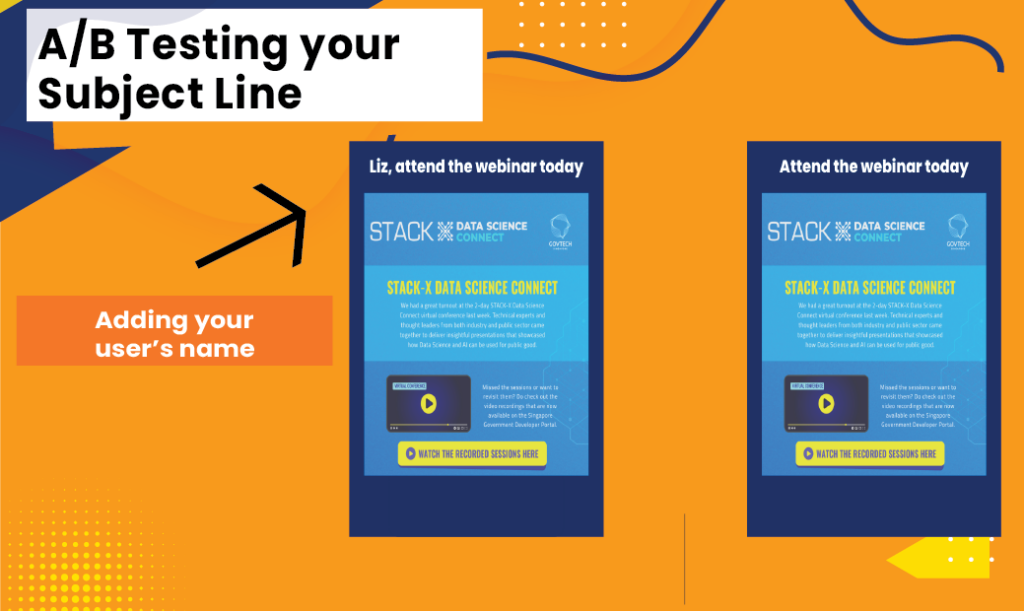

One way to test your subject line is to personalise it with the subscriber’s name and compare it against a more general subject line to see which works better for your recipients.

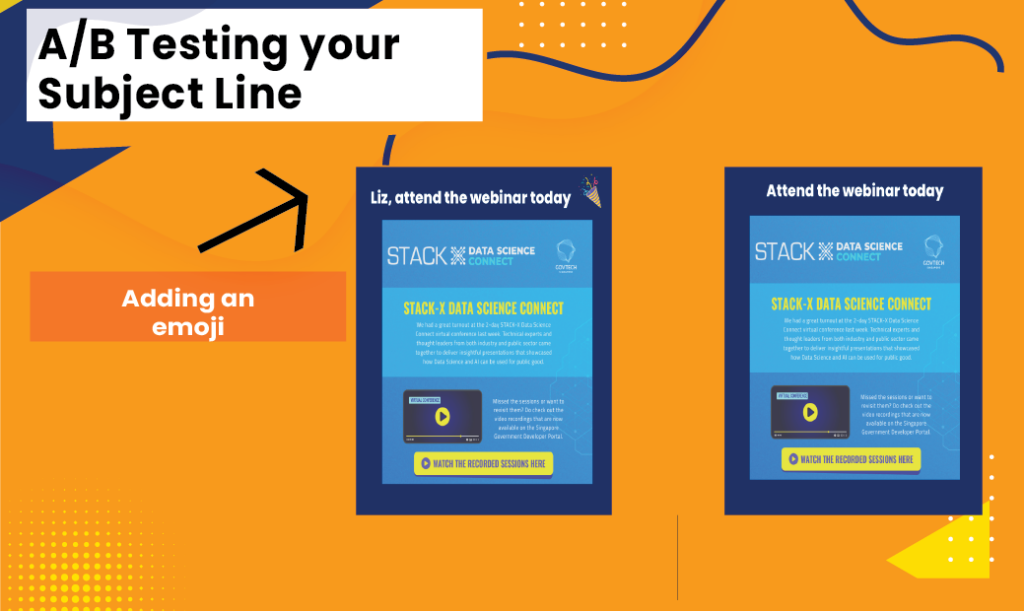

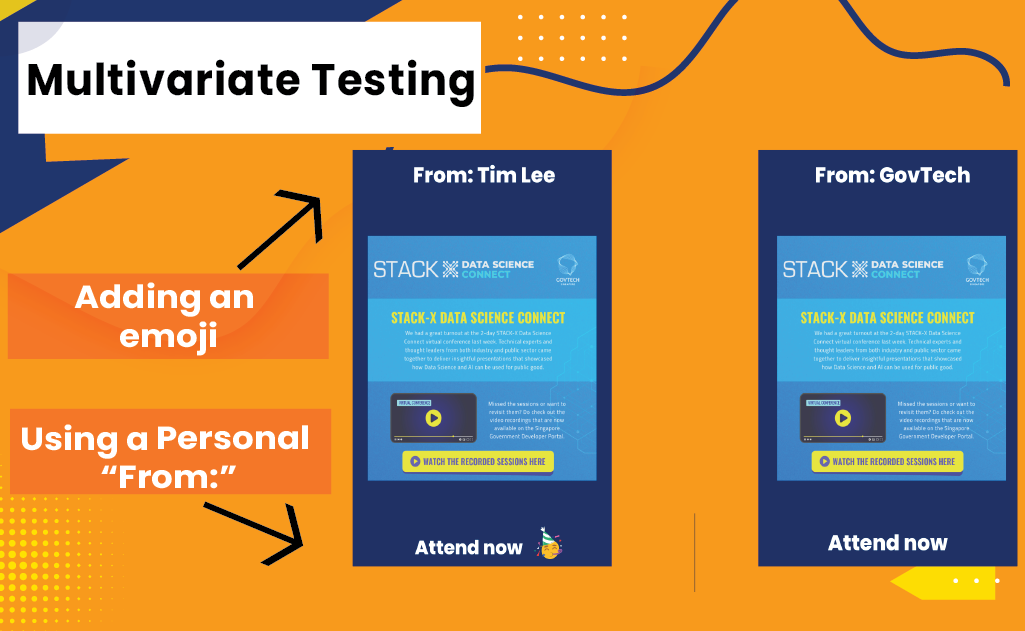
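The personalised-versus-general test can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the subscriber records, the `split_subscribers` and `subject_line` helpers, and the subject-line wording are all hypothetical, and most email platforms will do the splitting for you.

```python
import random


def split_subscribers(subscribers, seed=42):
    """Shuffle a copy of the list and split it into two near-equal groups."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    shuffled = list(subscribers)
    rng.shuffle(shuffled)
    mid = len(shuffled) // 2
    return shuffled[:mid], shuffled[mid:]


def subject_line(subscriber, personalised):
    """Return the personalised or the general subject line (hypothetical wording)."""
    if personalised:
        return f"{subscriber['name']}, your weekly deals are here"
    return "Your weekly deals are here"


# Hypothetical subscriber list for illustration only.
subscribers = [
    {"name": name, "email": f"{name.lower()}@example.com"}
    for name in ["Asha", "Ben", "Chloe", "Dev"]
]

group_a, group_b = split_subscribers(subscribers)

# Group A gets the personalised subject line, group B the general one.
for person in group_a:
    print(person["email"], "->", subject_line(person, personalised=True))
for person in group_b:
    print(person["email"], "->", subject_line(person, personalised=False))
```

Assigning subscribers at random, rather than by sign-up date or location, helps ensure that any difference in results comes from the subject line and not from the make-up of the groups.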

Another option is to test the use of emojis. Do emojis live up to the hype? There’s only one way to find out!
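To "find out" with some confidence, you can compare the open rates of the two versions. The sketch below uses a standard two-proportion z-test, which is one common way to do this and not necessarily what your email platform uses. The function name and the open counts are made up for illustration.

```python
import math


def two_proportion_z_test(opens_a, sent_a, opens_b, sent_b):
    """Compare two open rates; returns (z statistic, two-sided p-value)."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    # Pooled open rate under the assumption that both versions perform the same.
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        raise ValueError("Open rates of exactly 0% or 100% cannot be compared this way")
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up numbers: version A (with emoji) vs. version B (without emoji).
z, p_value = two_proportion_z_test(opens_a=120, sent_a=1000, opens_b=90, sent_b=1000)
print(f"z = {z:.2f}, p-value = {p_value:.3f}")
```

A small p-value (0.05 is a common threshold) suggests the difference in open rates is unlikely to be down to chance alone. With only a handful of recipients per version, this approximation becomes unreliable.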

You can also test the placement of your content. For example, does the benefit attract more of your readers’ attention at the top of the email or at the bottom?

Additionally, A/B testing visuals helps you decide how to design your future campaigns. For example, you can test whether including buttons, images, and other visual elements improves your results.

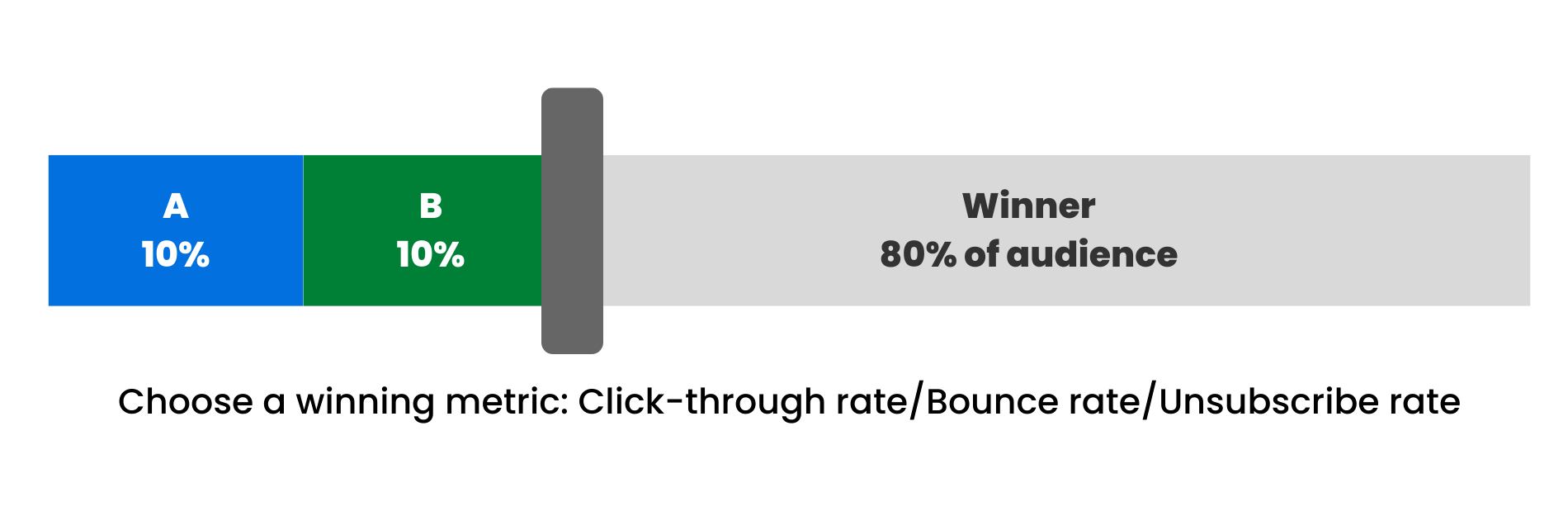

5. Using a representative sample size: In order to get more statistically significant results, you can stick to the 80/20 rule where you